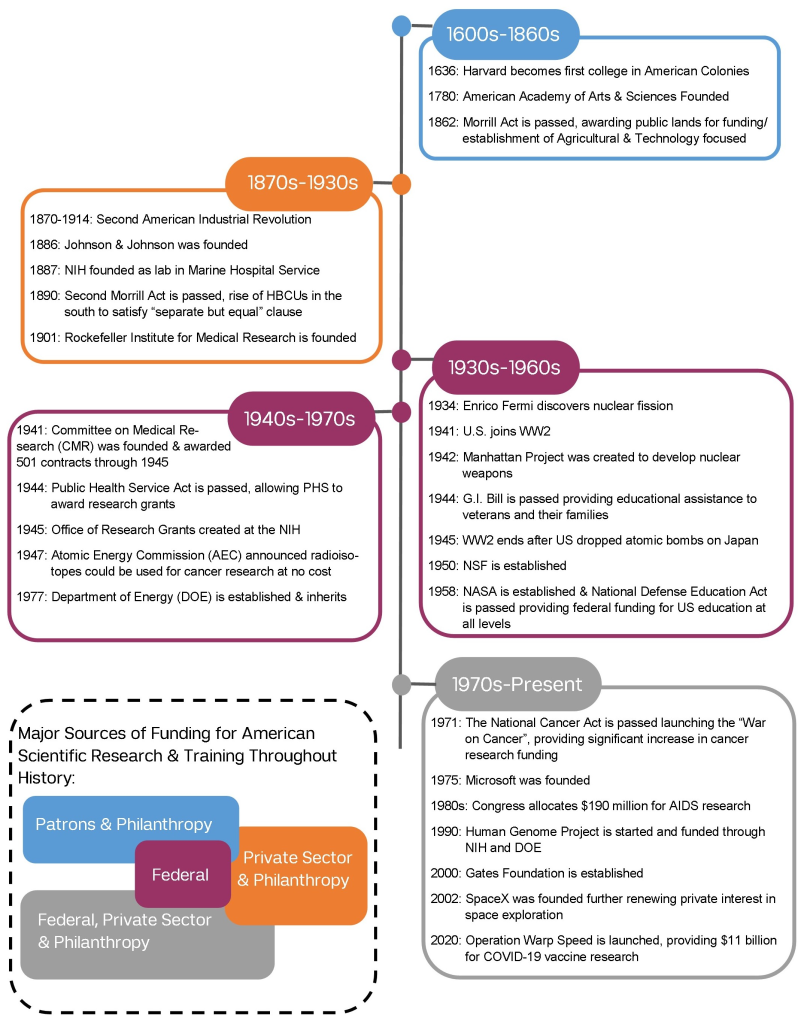

Scientific research is and always has been deeply entangled with politics, culture, and the broader currents of society. Like most human endeavors, doing science requires materials and manpower, both of which come with a price tag. Thus, funding becomes one of the most direct and potent forces influencing not just what kind of science gets done, but also who gets to do it, and, consequently, who reaps the rewards of the discoveries. Here, we explore some major milestones that drove the evolution of research funding in the United States—from the passions of gentleman-philosophers and philanthropic benefactors to the budget battles and policy debates echoing through the halls of Congress.

1600s–1860s: Hobby Science and Industrialization

In the early days of the American colonies, science was less a profession and more a refined pastime of wealthy philosophers, educated gentry, and civic or clergy leaders. To many of them, scientific inquiry resembled an artistic or philosophical venture, carried out for cultural prestige or civic virtue. But these early amateur scientists also viewed science as a noble intellectual pursuit. They drew on Enlightenment ideals that held reason and empirical inquiry to be the bedrock of civilized society and a driver of societal progress. Scientific societies modeled on their European counterparts, such as the American Philosophical Society (1743) and the American Academy of Arts and Sciences (1780), were established as venues for scientific correspondence, exchange of ideas, debate, and publication. Membership was highly prestigious and often limited to the social elite. The societies’ activities were largely funded by members’ dues and personal fortunes, or by the patronage of wealthy benefactors seeking fame and legacy.

While some major educational institutions, such as Harvard College and Yale College, were established during this period, their primary mission was to train religious and civic leaders. Their curricula focused on subjects like theology, language, and morality, with little to no emphasis on scientific teaching and inquiry. Even when professors did pursue scientific work, such as Harvard professor John Winthrop’s 1761 expedition to observe the transit of Venus, they were motivated by intellectual curiosity rather than any formal responsibility to run a research program. Such undertakings were often funded partly by the researcher’s personal fortune and partly by the college’s budget or external donors. This was a far cry from today’s model of funding through research grants. Even religious organizations sometimes funded what we would now call scientific inquiry, which then fell under the umbrella of natural philosophy and was seen as a step toward understanding God’s creation.

Many young Americans in the nineteenth century pursued higher education in Europe, where the first research universities were taking shape. Institutions like the University of Berlin (now Humboldt University of Berlin), founded in 1810, combined teaching with research. They were funded by the state, first by the Kingdom of Prussia and later by successive German governments. This concept was emulated in American institutions such as Johns Hopkins University, founded in 1876 for the explicit purpose of “establish[ing] a hospital and affiliated training college,” and MIT, founded in 1861 as a new type of educational institution focused on science, technology, and their practical application. Eventually, institutions such as Harvard and Yale also evolved beyond their origins as theological seminaries into their current forms as modern research universities.

As the U.S. shifted from an agrarian to a more industrialized society during the nineteenth century, the demand for workers with technical expertise grew. This created pressure for more universities and colleges to offer relevant courses. The federal government met this need with the Morrill Act of 1862, which granted states public lands to finance existing colleges or to charter new institutions offering agricultural and mechanical studies. The act laid the foundation for the state college and university systems that exist to this day and made higher education more accessible to white Americans. After the American Civil War, the Second Morrill Act of 1890 required states with segregated higher education systems either to admit Black students to their land-grant institutions or to provide a separate institution for them. This significantly expanded higher educational opportunities for Black students, who had previously been barred from all but a few colleges in the northern U.S. A system of Historically Black Colleges and Universities (HBCUs) was established in Southern states to satisfy the act’s “separate but equal” provision.

1870s–1930s: Innovation and Inventions During the Gilded Age

The wealth generated by the Second Industrial Revolution was most visible in the upper circles of American society. The discovery of oil in Pennsylvania in 1859 and the refining industry that followed helped spur the Gilded Age. This era was marked by stark wealth inequality, as a small number of industrial titans rose far above the average working American. Individuals such as John D. Rockefeller, who had amassed substantial wealth through the Standard Oil Company, became important patrons of science and research. Through his personal giving and the Rockefeller Foundation, he donated more than $500 million (equivalent to billions in today’s dollars). He supported many prominent research institutions, including the University of Chicago and the Rockefeller Institute for Medical Research, which, founded in 1901, was the first biomedical research institute in the United States. The Institute was instrumental in advancing the American biomedical enterprise, giving the country an institution to rival European hubs of scientific discovery such as the Koch and Pasteur Institutes.

Many key inventions and innovations during the Gilded Age opened new funding avenues for scientific research. Prominent inventors during this period, such as Alexander Graham Bell, Robert Wood Johnson, Henry Ford, and Thomas Edison, were either self-funded or relied on friends and family members at early stages of their careers. Later, these men reinvested profits from previous inventions to further research and development efforts at their respective companies, establishing private labs and hiring scientists and engineers. In some instances, innovators and inventors (and their families) funded science outside of company walls by creating philanthropic foundations, many of which provide support for scientific research and academic institutions even today.

In addition to private investment, the government also established various research and regulatory institutions to address the growing needs of American society. The National Institutes of Health (NIH) originated in 1887 as the Hygienic Laboratory of the Marine Hospital Service and later grew into a powerhouse of biomedical research. Similarly, the U.S. Department of Agriculture (USDA), founded in 1862, played a key role in supporting agricultural science through educational programs and research initiatives. During the Great Depression, New Deal programs expanded the workforce at such institutions, since those programs focused on providing employment amid the economic collapse. President Franklin Roosevelt was instrumental in advocating for research on polio, a major public health crisis at the time. In 1938, he founded the National Foundation for Infantile Paralysis (now the March of Dimes). The foundation went on to fund the 1954 field trials of Jonas Salk’s polio vaccine, paving the way for the vaccine’s mass production. Overall, however, federal financial support for research was still meager compared with today’s funding structures, and many of these agencies existed mainly as support structures for the U.S. military.

1930s–60s: Wartime Support for STEM

The rise of American research universities during the Second Industrial Revolution had begun to bridge the gap between research funding and government policy. The two became inextricably linked during, and because of, one of the most transformational periods in U.S. history: the Second World War (WWII). By then, scientific inquiry was widely recognized as a driver of societal development but was still viewed as fuel for slow, long-term progress. Funding scientific research ranked low on the federal government’s priority list and was largely the purview of local governments or private patrons. But developments in nuclear physics in the first half of the twentieth century turned this worldview on its head. They placed science at the center of politics and international relations and catapulted science funding to the top of the federal government’s policy agenda.

In 1934, Enrico Fermi found that bombarding uranium with neutrons induced radioactivity. The process behind his results was not understood until late 1938, when Otto Hahn and Fritz Strassmann showed that neutrons could split the uranium nucleus. Lise Meitner and Otto Frisch soon explained this process and named it nuclear fission. The discovery marked a turning point in physics: nuclear fission was suddenly a tangible reality. As WWII broke out in 1939, many physicists in America recognized the frightening possibility of using this reaction to build a bomb of hitherto unimagined explosive power. Graver still was the realization that Nazi Germany, where fission had been discovered, might reach the same conclusion. Alarmed by the prospect of the Nazis acquiring a nuclear weapon, physicists urged the U.S. federal government to launch its own nuclear weapons program. The government ultimately poured massive amounts of funding, infrastructure, and manpower into the bomb-building operation code-named the Manhattan Project.

Governments around the world quickly realized that the key advantage in WWII was the sophistication of one’s technology, even more than the size and prowess of one’s military. To address this need, the U.S. government established the National Defense Research Committee (NDRC), chaired by MIT engineer Vannevar Bush. The NDRC served as the government’s official conduit to research scientists and engineers. Soon, Bush convinced President Roosevelt to fold the NDRC into the even more powerful Office of Scientific Research and Development (OSRD). The OSRD oversaw contracts worth billions of dollars between the U.S. military and research universities across the nation. Beyond the Manhattan Project, the OSRD funded many other wartime science projects, such as radar research at the MIT Radiation Laboratory (the “Rad Lab”), which helped counter German U-boats. In this symbiotic arrangement, the government received scientific support for upgrading its military, while universities received financial resources that reinvigorated their research enterprises. It was the first time in U.S. history that scientists and engineers had worked so directly with the federal government, and it permanently changed the relationship between the government and the country’s scientific research enterprise.

Near the end of the war, Bush, then director of the OSRD, authored a highly influential report titled Science, the Endless Frontier. President Roosevelt had requested the report, and it was delivered to his successor, President Truman, in July 1945. Building on the government’s shifting attitude toward science and technology, the report made a landmark argument that scientific progress was essential to the nation’s health, security, and prosperity. It also stressed that the government bore a permanent responsibility to support scientific research, even in peacetime. Bush’s vision shaped U.S. science policy for decades to come and led to the establishment of the National Science Foundation (NSF) in 1950. The NSF was a federal agency dedicated to funding basic, curiosity-driven research, guided by scientists and housed in universities, ostensibly free from the constraints of serving immediate national interests.

As WWII gave way to the Cold War, science and technology research stayed at the top of the government’s policy priorities. In 1957, the Soviet Union’s launch of Sputnik sparked widespread alarm in the United States over a perceived loss of technological superiority. This anxiety ignited a flurry of activity within policy and research circles, marking the beginning of what became known as the space race. Capitalizing on this public sentiment, the Eisenhower administration launched several landmark science and research funding initiatives in 1958. These included establishing the Department of Defense’s research wing, the Advanced Research Projects Agency (ARPA, now DARPA), and creating NASA. The administration also enacted the National Defense Education Act (NDEA), often described as the first major federal legislation funding higher education and research since the Morrill Land-Grant Acts. Other policies increased enrollment of the country’s youth in higher education and research programs. One example is the G.I. Bill, which supported education for young WWII veterans. Overall, between the start of WWII and the peak of the Cold War frenzy, federal funding for scientific research increased twenty-five-fold, paving the way for later science-government relations.

1940s–70s: Biomedical Research in the Postwar Era

Government support for science was not limited to the physical sciences, as the need to fund biomedical research was also becoming apparent. Before the 1940s, most biomedical research was funded by companies or philanthropic foundations, while federal agencies such as the Public Health Service (PHS) conducted research in their own laboratories. But the demands of WWII drove federal support for extramural biomedical research, meaning research carried out by outside institutions. The Committee on Medical Research (CMR), housed under the OSRD, funded external research through contracts for projects that addressed the health needs of soldiers, including the development of antibiotics and injury treatments. From 1941 to 1945, the CMR approved 501 projects for funding, totaling about $400 million (adjusted for inflation). After the war, the PHS took over the remaining open contracts and laid the foundation for the NIH’s extramural research program.

The CMR was not the only agency with wartime roots to support external research. The Atomic Energy Commission (AEC) was created in 1946 to take over the Manhattan Project’s work and oversee the management and development of nuclear energy. It allocated funding toward external biomedical research on the effects of radiation on humans. In 1947, the AEC announced that radioisotopes could be used without cost for cancer research and treatment. This peacetime program led to the funding of cancer research centers and projects. Congress abolished the AEC in 1974. Its cancer research initiatives passed, by way of the short-lived Energy Research and Development Administration, to the Department of Energy (created in 1977), which continues to fund projects related to radiation therapies.

Though many federal funding structures for biomedical research were established in response to WWII, they remained in place once the war ended. In 1944, President Roosevelt signed the Public Health Service Act, which allowed the PHS to award grants to universities, institutions, and individual researchers. In response, the Office of Research Grants was created at the NIH in 1945. Within a year of the office’s creation, the NIH funded 264 extramural research grants totaling over $55 million (inflation-adjusted). The funds available for NIH extramural grants grew rapidly as more institutes were added under the NIH umbrella. In fiscal year 2024 alone, the NIH awarded over $37 billion in extramural research funding, about 79% of the agency’s total spending.

1970s–Present: Private Interest and Politicized Science Funding

The fruits of early investments in biomedical science became apparent around the turn of the twenty-first century with landmark achievements such as the Human Genome Project. The project was launched in 1990 as a collaboration between the U.S. Department of Energy and the NIH and soon grew into a global effort. The ensuing boom in genomics-based research and businesses created thousands of jobs and substantial financial returns.

The expansion of federal support for biomedical research in the postwar era also paved the way for the politicization of science. As federal funding became the backbone of research, presidents and lawmakers exerted greater influence over scientific priorities. For example, President Nixon’s “War on Cancer” poured billions of dollars of federal funding into basic science research through the expansion of the NIH and other avenues. During the AIDS epidemic of the 1980s, President Reagan publicly announced that AIDS was a “top priority” for his administration, and that same year, Congress allocated $190 million for AIDS research. More recently, in response to the COVID-19 pandemic, President Trump launched Operation Warp Speed, an $11 billion federal initiative aimed at rapid vaccine testing, development, and deployment.

However, the government’s power to influence science through funding allocation cuts both ways. The George W. Bush administration restricted federal funding for embryonic stem cell research in the early 2000s. This slowed progress in a promising field and drove researchers to seek private funding to continue their work. Similarly, politically motivated cuts to environmental science research and, as seen earlier this year, to NIH research highlight the fragility of relying on federal dollars as a major source of scientific funding.

Fluctuations in federal research funding have also stimulated a resurgence of philanthropic and private investments in scientific research. As during the Gilded Age, the tech boom has ushered in a new era of uber-wealthy individuals, such as Bill Gates, Elon Musk, and Jeff Bezos, who often invest significantly in research programs. In 2000, Microsoft founder Gates established the Gates Foundation, which funds various research initiatives and academic endeavors, particularly those in public health and technology-related fields. Bezos, who found success through the massive Amazon marketplace, has reportedly funded AI and environmental engineering initiatives. Both Musk and Bezos have renewed interest in space exploration via their companies SpaceX and Blue Origin (respectively), signaling a shift toward private funding of scientific innovation in areas that were once dominated by federal agencies. While these private efforts can accelerate innovation, they are shaped by donors’ personal interests and raise questions about transparency and public benefit.

Funding for industry research has also increased significantly, particularly in the pharmaceutical and biotech sectors. Pharmaceutical companies have enjoyed increased profits as a result of advances in mass production techniques and heightened demand for antibiotics, vaccines, and other therapeutics. This success has brought in more capital for further industry R&D, leading to increased investment in academia-industry joint projects like expensive drug trials. While this funding framework fuels rapid innovation, it has also introduced new ethical and regulatory challenges surrounding data ownership, publication bias, and conflicts of interest.

Despite the growth of alternative funding structures, federal funding remains the cornerstone of scientific research in the United States. But this support is fragile. As the current presidential administration freezes funding and slashes budgets for federal science organizations, many individuals and institutions are seeking financial relief through private sector and philanthropic funding, while others are considering leaving the U.S. entirely.

The scientific research boom we witnessed during WWII and in the decades after was largely thanks to robust federal funding. Though today’s attempts to limit the government’s investment in science are framed as a way to improve the country’s fiscal health, this logic ignores the fact that our system of federal support for research emerged from the idea that scientific innovation is critical to U.S. economic and military dominance. Can our government truly trade scientific progress for a healthier economy, or, in defunding research, will it lose both?